
If you want to try Scala interactively, you can download the latest Scala binary for Windows from the Scala download page. Keep track of where you installed it, such as `C:\Program Files (x86)\scala`.

Install Spark on Windows and run Scala programs: Mac OS X

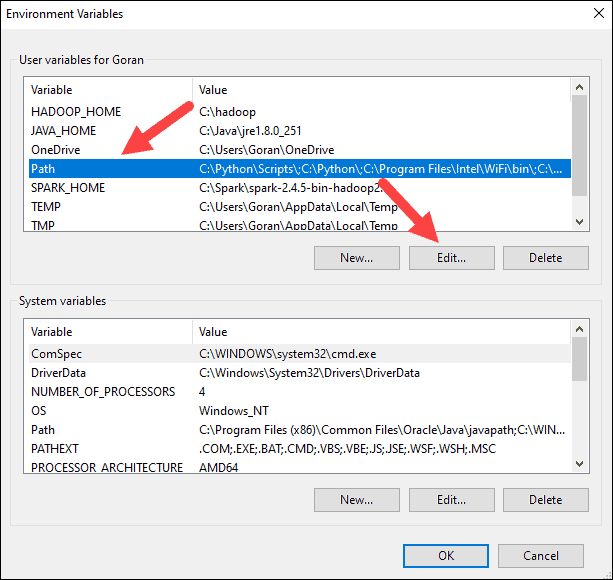

Throughout this book we will be using Mac OS X El Capitan, Ubuntu as our Linux flavor, and Windows 10; all the examples presented should run on any of these machines.

Install Scala (optional). Because the Scala IDE includes Scala versions, installing the Scala programming language locally is optional. Spark installs Scala during the installation process, so we just need to make sure that Java and Python are present on your machine.

Next, set the environment variables so that you can run the Spark shell directly from a command prompt window. Select the result labeled Edit the system environment variables (for example, after searching for "environment variables" from the Start menu). In the lower-right corner, click Environment Variables and then click New in the next window.

Let’s create a Spark RDD using the input file that we want to run our first Spark program on. You should specify the absolute path of the input file:

`scala> val inputfile = sc.textFile("input.txt")`

On executing the above command, the shell echoes the newly created RDD `inputfile`. Now comes the step to count the number of words.

For the SimpleApp project, create a plugins.sbt file in /SimpleApp/project/ containing `addSbtPlugin("…" % "sbteclipse-plugin" % "5.2.…")`.
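In the Spark shell, counting words usually chains `flatMap`, `map` and `reduceByKey` on the RDD returned by `sc.textFile`, for example `inputfile.flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey(_ + _)`. The sketch below is not the author's code: it reproduces the same split / pair / sum-by-key shape on plain Scala collections so you can check the logic without a running Spark installation. `WordCountSketch` and `wordCount` are hypothetical names, and `groupBy` plus a sum stands in for Spark's `reduceByKey`.

```scala
// Local sketch of word count on plain Scala collections (no Spark needed).
// The hypothetical helper `wordCount` mirrors the RDD pipeline
// flatMap -> map -> reduceByKey, using groupBy + sum in place of reduceByKey.
object WordCountSketch {

  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split("\\s+").toSeq) // split each line into words on whitespace
      .filter(_.nonEmpty)             // drop the empty token produced by leading spaces
      .map(word => (word, 1))         // pair each word with a count of 1
      .groupBy(_._1)                  // group the pairs by word (the "key")
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // sum counts per key

  def main(args: Array[String]): Unit = {
    val lines = Seq("to be or not", "to be")
    // Print the counts sorted by word so the output order is stable
    wordCount(lines).toSeq.sortBy(_._1).foreach { case (w, n) => println(s"$w: $n") }
  }
}
```

Running `main` prints `be: 2`, `not: 1`, `or: 1` and `to: 2`, one per line. On a real cluster the grouping happens per partition before data is shuffled, which is why Spark offers `reduceByKey` rather than a plain `groupBy`.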

Apache Spark is a popular distributed computation environment. It is written in Scala, and it has Scala, Python and R interfaces that provide an API for processing massive datasets in a distributed way, so you can also interface with it from Python. In this tutorial you can learn about Apache Spark Scala installation and setup on Ubuntu and Windows, as used by most Spark developers.

To build a standalone application, create the folder structure within the "SimpleApp" dir: `src/main/java`, `src/main/resources`, `src/main/scala`, `src/test/java`, `src/test/resources` and `src/test/scala`. Then create a build.sbt file in the /SimpleApp dir with the content below:

`libraryDependencies += "…" %% "spark-sql" % "2.2.1"`

Ensure the Scala version and Spark version used here match exactly what you see while running the `spark-shell` command. All `sbt` commands need to be run from the /SimpleApp dir level.

To experiment with Spark and Python (PySpark or Jupyter), you need to install both. For those who want to learn Spark with Python (including students of these BigData classes), here’s an intro to the simplest possible setup.
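A complete build.sbt for the SimpleApp project might look like the sketch below. The project name, version and exact `scalaVersion` are assumptions: replace `scalaVersion` with whatever `spark-shell` reports on your machine. The dependency uses `org.apache.spark`, the standard organization ID for Spark artifacts; `%%` tells sbt to append the Scala binary version (for example `_2.11`) to the artifact name.

```scala
// build.sbt for the SimpleApp project (place it directly in /SimpleApp).
// Assumptions: name/version are placeholders; scalaVersion must match the
// Scala version printed by `spark-shell` (pre-built Spark 2.2.x uses Scala 2.11).
name := "SimpleApp"

version := "0.1"

scalaVersion := "2.11.8"

// %% appends the Scala binary version, resolving to spark-sql_2.11
libraryDependencies += "org.apache.spark" %% "spark-sql" % "2.2.1"
```

With this file in place, running `sbt package` from /SimpleApp compiles the sources under `src/main/scala` into a JAR under `target/`.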

If you just want to run Scala code, the above prerequisites are enough. Make sure that a JRE is available on your machine and that it is added to the PATH environment variable; on my system, Java Home is `C:\Program Files\Java\jre`. If you work in Eclipse, download and install the Scala plugin for Eclipse.

Steps to set up Jupyter Notebook to run Scala and Spark: for our Apache Spark environment, we choose the jupyter/pyspark-notebook Docker image, as we don’t need the R and Scala support.
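To confirm which JRE and Scala version your setup actually picks up after editing PATH and JAVA_HOME, a small program like the one below can help. It is a minimal sketch; `EnvCheck` and `javaInfo` are hypothetical names, and it only reads standard JVM system properties (`java.version`, `java.home`) and the `JAVA_HOME` environment variable.

```scala
// Minimal environment check: reports the Scala version and the JRE this
// process runs on, plus the JAVA_HOME environment variable if it is set.
object EnvCheck {

  // Returns (java.version, java.home, JAVA_HOME if defined)
  def javaInfo(): (String, String, Option[String]) =
    (
      System.getProperty("java.version"),
      System.getProperty("java.home"),
      sys.env.get("JAVA_HOME")
    )

  def main(args: Array[String]): Unit = {
    val (version, home, envHome) = javaInfo()
    println(s"Scala:        ${scala.util.Properties.versionString}")
    println(s"java.version: $version")
    println(s"java.home:    $home")
    println(s"JAVA_HOME:    ${envHome.getOrElse("(not set)")}")
  }
}
```

If `java.home` points somewhere other than the Java Home you configured, the PATH entry for a different JRE is being found first.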
